Web Data Scraping Proxy – Yilu Proxy

Why does web scraping need proxy servers?

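Proxies aren't only for hiding your identity. Web scraping is an important and useful tool for researchers, journalists, and many others, and proxies are a standard part of the scraping toolkit. Because a scraper runs as a standalone program rather than in your browser, and a proxy keeps your own IP address out of the target site's logs, scraping through a proxy is also a way to conduct research more privately.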

Think of a proxy as a borrowed tool: you use someone else's IP address for the job instead of your own. When one isn't enough, there are services that rent out large numbers of them.

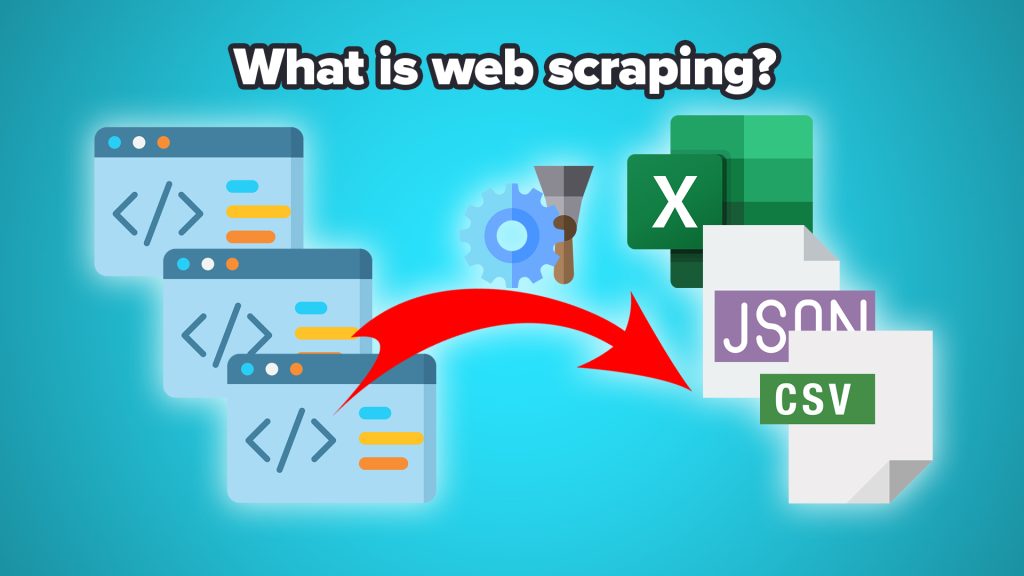

The internet contains billions of pages, each with its own content. A web crawler (a program that automatically follows the links on each page it visits and records the pages it finds) can be used to collect this information; however, even with modern hardware, collecting everything could take years.
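A crawler of the kind described above can be sketched in a few dozen lines of Python. This is a minimal illustration, not a production crawler: it assumes a caller-supplied `fetch(url)` function (hypothetical; in practice it might wrap `urllib.request.urlopen`) that returns a page's HTML or `None`, and it caps the crawl with a `max_pages` limit, since following every link would never finish.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable, Optional
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collects the absolute URL of every <a href="..."> in a page."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links against the page URL and drop #fragments.
                absolute, _fragment = urldefrag(urljoin(self.base_url, value))
                self.links.append(absolute)


def extract_links(html: str, base_url: str) -> list[str]:
    parser = LinkExtractor(base_url)
    parser.feed(html)
    parser.close()
    return parser.links


def crawl(start_url: str, fetch: Callable[[str], Optional[str]], max_pages: int = 100) -> list[str]:
    """Breadth-first crawl: visit pages in discovery order, at most max_pages of them."""
    seen = {start_url}          # URLs already queued, so nothing is fetched twice
    queue = deque([start_url])  # frontier of pages still to visit
    visited: list[str] = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:        # fetch failed; skip this page
            continue
        visited.append(url)
        for link in extract_links(html, url):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return visited
```

Injecting `fetch` keeps the crawl logic testable offline with a dictionary of pages, e.g. `crawl("https://example.com/", pages.get, max_pages=50)`.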

Instead, scraper programs can be designed to "scrape" specific subsets of websites based on keywords, search terms, or other criteria. For example, a researcher may want to know what sorts of news articles have been published about a particular topic over the past decade. They could search a news archive such as Google News for their keywords, then use a web scraper to download each matching article, extract the parts they care about (headline, publication date, body text), and store them in a database. From there, they can analyze the data and draw conclusions.
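The filter-and-store step of that workflow might look like the sketch below. It assumes the articles have already been downloaded and parsed into a hypothetical `Article` record; the table layout and the simple case-insensitive keyword match are illustrative choices, and SQLite (from Python's standard library) stands in for "a database".

```python
import sqlite3
from dataclasses import dataclass


@dataclass
class Article:
    url: str
    title: str
    published: str  # ISO date string, e.g. "2015-06-01"
    body: str


def matches(article: Article, keywords: list[str]) -> bool:
    """True if every keyword appears in the title or body (case-insensitive)."""
    text = f"{article.title} {article.body}".lower()
    return all(k.lower() in text for k in keywords)


def store(conn: sqlite3.Connection, articles: list[Article], keywords: list[str]) -> int:
    """Save matching articles; return how many matched the keywords."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS articles ("
        "url TEXT PRIMARY KEY, title TEXT, published TEXT, body TEXT)"
    )
    kept = [a for a in articles if matches(a, keywords)]
    # INSERT OR IGNORE skips articles whose URL is already stored.
    conn.executemany(
        "INSERT OR IGNORE INTO articles VALUES (?, ?, ?, ?)",
        [(a.url, a.title, a.published, a.body) for a in kept],
    )
    conn.commit()
    return len(kept)


def articles_per_year(conn: sqlite3.Connection) -> list[tuple[str, int]]:
    """A first analysis: how many stored articles were published each year."""
    rows = conn.execute(
        "SELECT substr(published, 1, 4) AS year, COUNT(*) FROM articles "
        "GROUP BY year ORDER BY year"
    )
    return rows.fetchall()
```

Keying the table on the article URL means re-running the scraper over overlapping search results does not create duplicate rows.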

The name comes from the way the data is "scraped" off pages that were built for human readers, rather than requested through a structured interface such as an API. Scraping isn't limited to blogs and news sites either: websites of all kinds can be scraped, including social media platforms such as Facebook and Twitter.

Your web browser makes requests directly to websites when you type in URLs. A scraper does the same thing, but it sends far more requests, far faster, than a person would, and many sites respond by rate-limiting or blocking the IP address those requests come from. That is where a proxy server (an intermediary between your computer and the internet) comes in. A scraping proxy forwards your scraper's requests to the target site, so the site sees the proxy's IP address rather than yours.
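In Python's standard library, routing requests through a proxy is a matter of installing a `ProxyHandler` on an opener. The sketch below assumes a hypothetical proxy at `proxy.example.com:8080` with placeholder credentials; your provider will supply the real host, port, and authentication details.

```python
import urllib.request

# Hypothetical proxy endpoint: replace with the host, port and credentials
# your proxy provider gives you.
PROXY_URL = "http://user:password@proxy.example.com:8080"


def make_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Build an opener that sends both http:// and https:// requests via proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def fetch(opener: urllib.request.OpenerDirector, url: str, timeout: float = 10.0) -> str:
    """Download url through the opener's proxy and return the body as text."""
    # The User-Agent value is a made-up example.
    request = urllib.request.Request(url, headers={"User-Agent": "my-research-bot/0.1"})
    with opener.open(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")
```

With a real endpoint, `fetch(make_opener(PROXY_URL), "https://example.com/")` returns the page's HTML, and the target site sees the proxy's IP address rather than yours.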

The proxy receives each request from your client, opens (or reuses) a connection to the target website, and forwards the request. It then relays the website's response back to your client, which can go on to make further requests through the same proxy.
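Part of what a forward proxy does can be shown concretely. Under HTTP/1.1, a client talking to a proxy puts the full URL in the request line (the "absolute form", e.g. `GET http://example.com/page HTTP/1.1`); the proxy reads the host and port from it, connects there, and sends the request on with just the path. The hypothetical helper below sketches that parsing step for plain `http://` requests only. HTTPS traffic instead arrives as a `CONNECT host:443` request, after which the proxy simply relays encrypted bytes in both directions.

```python
from urllib.parse import urlsplit


def parse_proxy_request_line(line: str) -> tuple[str, int, str]:
    """Split an absolute-form request line into (host, port, origin-form line).

    The host and port tell the proxy where to connect; the origin-form line
    is what it sends to that server in place of the client's original line.
    """
    method, target, version = line.split(" ")
    parts = urlsplit(target)
    if parts.scheme != "http" or not parts.hostname:
        raise ValueError(f"not an absolute-form http URL: {target!r}")
    port = parts.port or 80  # default HTTP port when none is given
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    return parts.hostname, port, f"{method} {path} {version}"
```

For example, `parse_proxy_request_line("GET http://example.com:8080/a?b=1 HTTP/1.1")` yields the host `example.com`, port `8080`, and the line `GET /a?b=1 HTTP/1.1` to forward.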

Scraping proxy servers are used today for various purposes. Some are intended specifically for anonymous browsing and hiding IP addresses. Others add privacy by encrypting the traffic between you and the proxy. Still others let users get around restrictions that websites place on certain IP addresses or regions. Some proxies can even block tracking scripts that collect personal data from your web activity.

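Each proxy server presents its own public IP address to the sites it contacts. Without a proxy, every request you send carries the public IP address of your own connection, so a site can link all of those requests to you. This is how proxies provide anonymity: routing requests through a proxy means the site sees the proxy's address instead, and switching to a different proxy, or rotating through a pool of them, changes the address the site sees.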
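Rotating through a pool is commonly done round-robin. The class below is a minimal sketch assuming you already have a list of proxy URLs (the ones in any example are placeholders): it hands them out in turn and skips any that have been marked as failing, for instance after a timeout or a block page. Each URL it returns could then be handed to whatever proxy-aware HTTP client you use.

```python
import itertools
from typing import Iterator


class ProxyPool:
    """Hands out proxy URLs in round-robin order, skipping ones marked as bad."""

    def __init__(self, proxies: list[str]) -> None:
        if not proxies:
            raise ValueError("need at least one proxy")
        self._proxies = list(proxies)
        self._bad: set[str] = set()
        self._cycle: Iterator[str] = itertools.cycle(self._proxies)

    def get(self) -> str:
        # Check at most one full lap of the cycle so we never loop forever.
        for _ in range(len(self._proxies)):
            proxy = next(self._cycle)
            if proxy not in self._bad:
                return proxy
        raise RuntimeError("every proxy in the pool is marked bad")

    def mark_bad(self, proxy: str) -> None:
        """Stop handing out a proxy, e.g. after it times out or gets blocked."""
        self._bad.add(proxy)
```

Round-robin spreads requests evenly, so no single address sends enough traffic to stand out; a real pool might also retry bad proxies after a cool-down period.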

However, proxy servers are not always reliable. If a proxy is misconfigured, or is a "transparent" proxy that passes your address along in headers such as X-Forwarded-For, it may end up leaking your real IP address, and with it your approximate location.
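One way to check a proxy for leaks is to send a request through it to a service that echoes back what it received (httpbin.org's `/get` endpoint, for example, reports the caller's IP as `origin` along with the request headers), then compare that with your real IP address. The function below sketches only the comparison step; its header list covers common forwarding headers and is not exhaustive.

```python
import re

# Headers that proxies commonly add when forwarding a request.
LEAKY_HEADERS = ("x-forwarded-for", "x-real-ip", "forwarded", "via")


def _tokens(value: str) -> set[str]:
    """Split a header value like 'for=192.0.2.1;proto=http' into bare tokens."""
    return {t for t in re.split(r'[\s,;="\[\]]+', value) if t}


def find_leaks(real_ip: str, seen_ip: str, seen_headers: dict[str, str]) -> list[str]:
    """Compare what the target saw with your real IP; return a list of problems."""
    problems = []
    if real_ip in _tokens(seen_ip):
        problems.append(f"target saw your real IP as the origin: {seen_ip}")
    lowered = {k.lower(): v for k, v in seen_headers.items()}
    for name in LEAKY_HEADERS:
        if name in lowered:
            value = lowered[name]
            if real_ip in _tokens(value):
                problems.append(f"{name} header exposes your real IP: {value}")
            else:
                problems.append(f"{name} header reveals that a proxy is in use")
    return problems
```

An empty list means the echo service saw neither your address nor any forwarding header; a non-empty list tells you which part of the proxy's configuration to look at.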

Also, while free proxy servers exist, they are often slow and unreliable; commercial scraping proxies typically charge by the bandwidth you use or by the number of IP addresses you need.

In conclusion, a web scraping proxy might seem like a minor detail, but it is one of the most powerful tools in any information-gathering arsenal.